
Chinese artificial intelligence (AI) research lab DeepSeek released its R1 large language model (LLM) Jan. 20, 2025, upending the world of AI due to its high performance vis-à-vis LLMs developed by Western technology giants. Although there are questions about how DeepSeek achieved its performance, such as the types of chips it used and whether it was trained by querying other LLMs, the company has been remarkably open with its research and documentation. Additionally, DeepSeek has released R1 and other projects under the MIT License, a software license that allows for modification, distribution and commercial use without fees. It is a rare move that undercuts well-funded and profit-seeking peers including OpenAI.

But there are multiple concerns. Several countries and organizations have already banned its use because of its country of origin, China, which is a top cybersecurity worry due to its aggressive cyber espionage programs. Security companies contend R1 is vulnerable to jailbreaks, which are methods used to circumvent safety controls designed to prevent harmful content from being generated. Additionally, the openness with which DeepSeek has released its work means its models and research could be repurposed maliciously, offering new opportunities for cybercriminals to abuse its technology. This post will examine the geopolitical dynamics around DeepSeek and China, how the technology could be abused, rising cybercriminal interest and data security concerns.

DeepSeek was established by CEO 梁文锋 (Eng. Liang Wenfeng) and is fully funded by Liang’s quantitative hedge fund 幻方 (Eng. Huanfang) or High-Flyer Capital. High-Flyer Capital has used AI to aid in its financial trading and in 2023 began investing in developing its own AI capabilities. DeepSeek is an outlier in China’s sprawling AI landscape because of the lack of external funding. Although DeepSeek has been cast in the West as an unknown, it has very much been on the radar of China watchers as one of many companies and projects in the country with a horse in the AI race. The Chinese government has approved more than 117 LLMs since the first release to the public in August 2023. All Chinese tech giants — including Alibaba, ByteDance, Huawei and Tencent — are developing their own advanced open source LLMs. The majority of these models relied on open source development frameworks such as Meta’s Llama series as the basis for their design.

In January 2025, Premier Li Qiang invited Liang to participate in a symposium where experts from various fields gathered to draft a government work report. This suggests the Chinese government has taken notice of DeepSeek’s growing importance in the AI ecosystem — a key economic driver in China’s strategic development plans — and is prepared to lean on the startup to catalyze the industry’s growth.

R1, DeepSeek’s reasoning model, which is based on DeepSeek version 3, made the headlines, but the company has been steadily releasing different components of its AI research on GitHub. Those projects include DeepSeek LLM, which powers the chatbot; DeepSeek Coder and DeepSeek Coder version 2, which are coding models that support Python, C#, Rust, Ruby and dozens more languages; DeepSeek Math, which does mathematical reasoning; DeepSeek-VL, short for Vision-Language, used for image and language understanding; and DeepSeek version 2 and DeepSeek version 3, both versions of its LLM. DeepSeek has also released “distilled” models, which are slimmed-down versions of the larger models that are designed to retain much of the larger models’ accuracy while running on modest laptop hardware. The chatbot is accessible through several channels, including a web application, an application programming interface (API) linked to its cloud service and a mobile application. The web and mobile applications can be used for free upon registration (DeepSeek does charge for access to its API, a norm in the industry). Registration requires an email address, a Google account or a +86 phone number, and agreement with the terms of use and privacy policy, the latter of which contains details about the types of data DeepSeek collects from users.

The next goal for AI is developing models that can solve more complex problems and, in a way, “think” deeper before returning an answer. DeepSeek claims that R1 rivals the performance of OpenAI’s o1 reasoning model, that it runs far cheaper on cloud infrastructure and that it was trained for just US $5.6 million. That would mark significant progress, although some have pointed out the claimed cost seems implausibly low and likely does not encompass peripheral costs of AI research.

Microsoft and OpenAI, two entities with a significant amount at stake in a challenge from an upstart, have questioned DeepSeek’s methods and are investigating whether DeepSeek may have leaned on OpenAI’s models to train its own. This process, called distillation, allows an LLM to learn from the outputs of other LLMs and is a common practice in AI development. The investigation focuses on the use of OpenAI’s API, which Microsoft researchers say may have been used to exfiltrate data. The bottom line, however, is that Chinese researchers, despite being denied access to the latest Nvidia chips due to U.S. restrictions aimed at preserving the country’s AI dominance, may have shown that computing muscle matters less than previously thought relative to innovation.
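To make the idea of learning from another model's outputs concrete, here is a minimal sketch of the data-collection step of output-based distillation. It is an illustration under stated assumptions, not a description of DeepSeek's method: `teacher` is a hypothetical stand-in callable (no real API is called), and `collect_teacher_outputs` and `to_jsonl` are names invented for this example.

```python
import json
from typing import Callable, Dict, Iterable, List


def collect_teacher_outputs(
    prompts: Iterable[str],
    teacher: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Query a teacher model once per unique prompt and keep non-empty answers.

    `teacher` is a hypothetical stand-in for any model that maps a prompt
    to a text completion.
    """
    seen = set()
    records: List[Dict[str, str]] = []
    for prompt in prompts:
        key = prompt.strip()
        if not key or key in seen:
            continue  # skip blank and duplicate prompts
        seen.add(key)
        answer = teacher(key).strip()
        if answer:
            records.append({"prompt": key, "completion": answer})
    return records


def to_jsonl(records: Iterable[Dict[str, str]]) -> str:
    """Serialize prompt/completion pairs as JSON Lines, a common fine-tuning format."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    # Toy teacher for demonstration only: it just upper-cases the prompt.
    pairs = collect_teacher_outputs(["hello", "hello ", "", "world"], str.upper)
    print(to_jsonl(pairs))
```

The resulting prompt and completion pairs would then serve as supervised training data for a smaller "student" model, which is the step where the student actually learns to imitate the teacher.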

DeepSeek surfaced from the depths at a time of increasingly barbed relations between China, the U.S. and other Western countries over cyberattacks and espionage. In September 2024, the first public reports appeared about a Chinese nation-state attack group dubbed Salt Typhoon, which was found to have infiltrated major U.S. internet and telecommunications providers and others abroad. The group used stolen credentials and vulnerability exploitation to capture call records, logs and some voice communications of high-level political figures. The intrusions marked one of the most alarming compromises threatening U.S. national security.

Additionally, there has been a years-long conflict over the popular video-sharing application TikTok, whose origins are strongly rooted in China. Former U.S. President Joe Biden signed a law in April 2024 that ordered TikTok’s owner, ByteDance, to sell the app to an entity approved by the U.S. government by Jan. 19, 2025, or face a ban. The law stemmed from concerns that China, which exerts heavy influence over tech companies, could access TikTok user data, track federal government employees or use the service to push propaganda or disinformation campaigns. TikTok did not comply but was given a brief reprieve. Soon after taking office Jan. 20, 2025, President Donald Trump signed an executive action that gave ByteDance a 75-day extension before the law is enforced.

DeepSeek has seemingly operated at arm’s length from the Chinese government so far, but it is unlikely to maintain its independence as it gains popularity. Beijing previously took “golden shares” in technology giants Alibaba and Tencent to extend its influence over the Chinese technology sector. These special management shares come with unique rights over business decisions. In 2023, the government also took small equity stakes in ByteDance and Weibo to be involved directly in business operations.

Several countries and organizations have already moved to restrict use of DeepSeek over security concerns. In early February 2025, the Australian government banned the use of DeepSeek on government devices after it found the application posed an “unacceptable risk.” Several other countries and entities have also banned it, including Italy, Taiwan, the state of Texas, the U.S. Navy, the U.S. Defense Department and NASA.

DeepSeek collects much of the same type of data that TikTok or any other web or mobile application would customarily collect for performance, compliance and customer service reasons. According to its privacy policy, DeepSeek retains profile data including birthdates, usernames, email addresses, phone numbers and passwords. It collects the audio or text prompts entered into the chatbot, plus any uploaded files and chat histories. It also collects the telemetry commonly collected by web-based services: device models, operating system (OS) types, keystroke patterns and rhythms, IP addresses and language preferences. However, China’s National Intelligence Law imposes extensive security obligations on citizens and organizations, which means any of this data could be transferred to authorities without the affected party being notified.

As with any organization, there are concerns about the information security posture of DeepSeek itself and its ability to protect the data it ingests. Cloud security company Wiz wrote Jan. 29, 2025, that DeepSeek exposed a ClickHouse database that was publicly accessible and required no authentication. Wiz discovered this through routine external attack surface queries, looking for subdomains and then for the use of nonstandard ports. The database contained plain-text chat, API keys, back-end details and operational metadata. Wiz notified DeepSeek and the exposure was closed. Wiz’s blog notes that “while much of the attention around AI security is focused on futuristic threats, the real dangers often come from basic risks — like accidental external exposure of databases.”
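The kind of basic exposure Wiz describes is something an organization can check for on its own assets. The following is a minimal sketch under stated assumptions, not Wiz's tooling: the host names are placeholders, `is_port_open` and `find_exposed` are hypothetical helper names, and the port list assumes ClickHouse's default HTTP interface (8123) and native protocol (9000) ports.

```python
import socket
from typing import Callable, Iterable, List, Tuple

# Default ClickHouse listeners: HTTP interface and native TCP protocol.
# Assumption: extend this tuple with whatever nonstandard ports matter to you.
CLICKHOUSE_PORTS: Tuple[int, ...] = (8123, 9000)


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False


def find_exposed(
    hosts: Iterable[str],
    ports: Iterable[int] = CLICKHOUSE_PORTS,
    probe: Callable[[str, int], bool] = is_port_open,
) -> List[Tuple[str, int]]:
    """Return every (host, port) pair that accepts a connection."""
    port_list = list(ports)
    return [(h, p) for h in hosts for p in port_list if probe(h, p)]


if __name__ == "__main__":
    # Placeholder host names: replace with subdomains your organization owns.
    my_hosts = ["db.example.com", "dev.example.com"]
    for host, port in find_exposed(my_hosts):
        print(f"Reachable from outside: {host}:{port}")
```

A reachable port is only a lead: the follow-up question is whether the service behind it demands authentication. The `probe` parameter is injectable so the enumeration logic can be exercised without touching the network.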

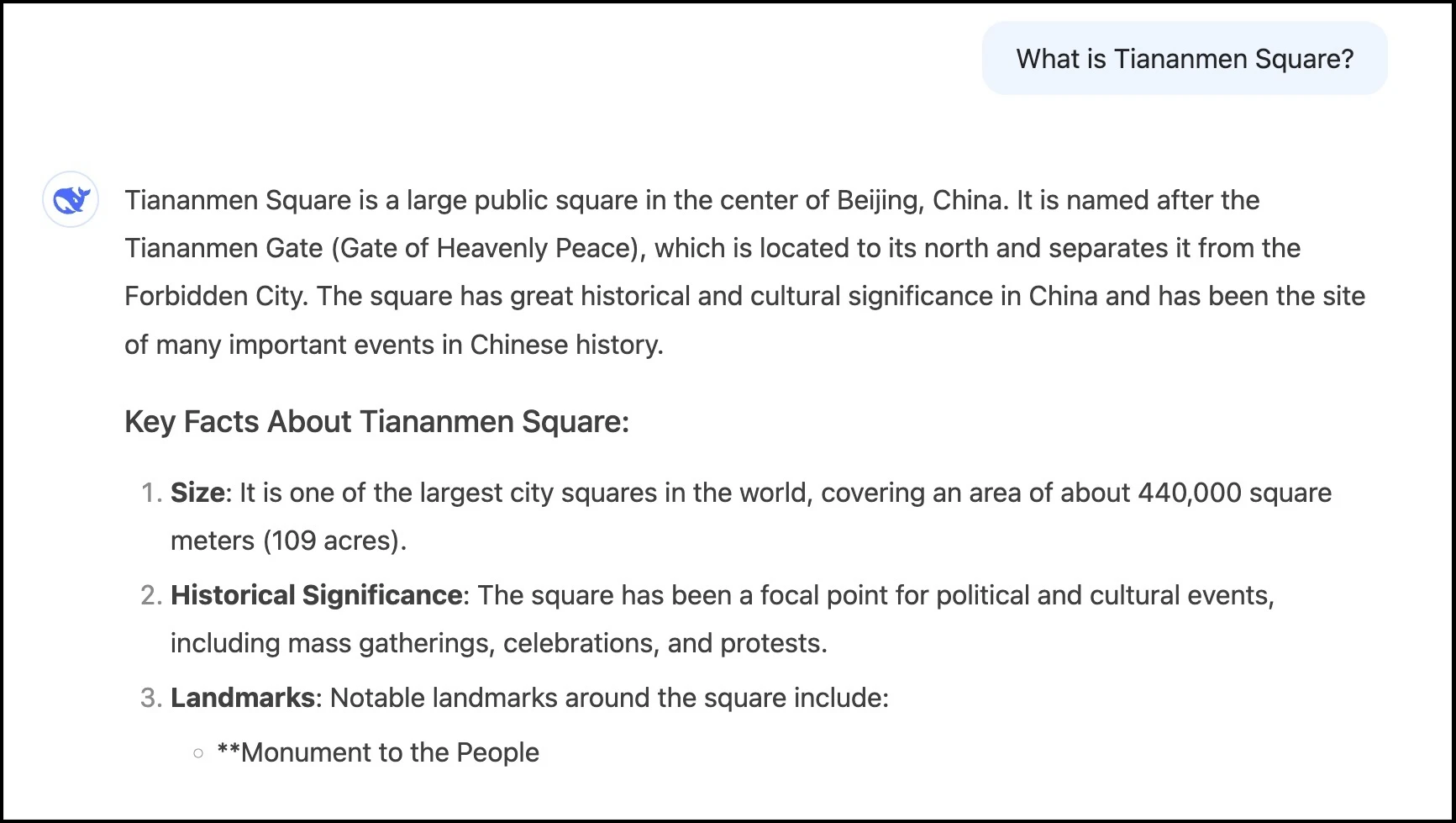

All mainstream AI models have safety guardrails that are designed to prevent them from returning harmful information, such as writing the code for a keystroke logger or instructions for how to make a pipe bomb. These guardrails are under constant refinement, however, as researchers and the public test the boundaries. Like other LLMs, DeepSeek will not answer certain types of questions. Many people have been testing its censorship boundaries, particularly around topics known to be heavily censored by China, such as the 1989 crackdown of pro-democracy protestors in Tiananmen Square. It revealed a not-quite-refined censorship mechanism.

A screenshot of a query to DeepSeek’s chatbot Feb. 5, 2025.

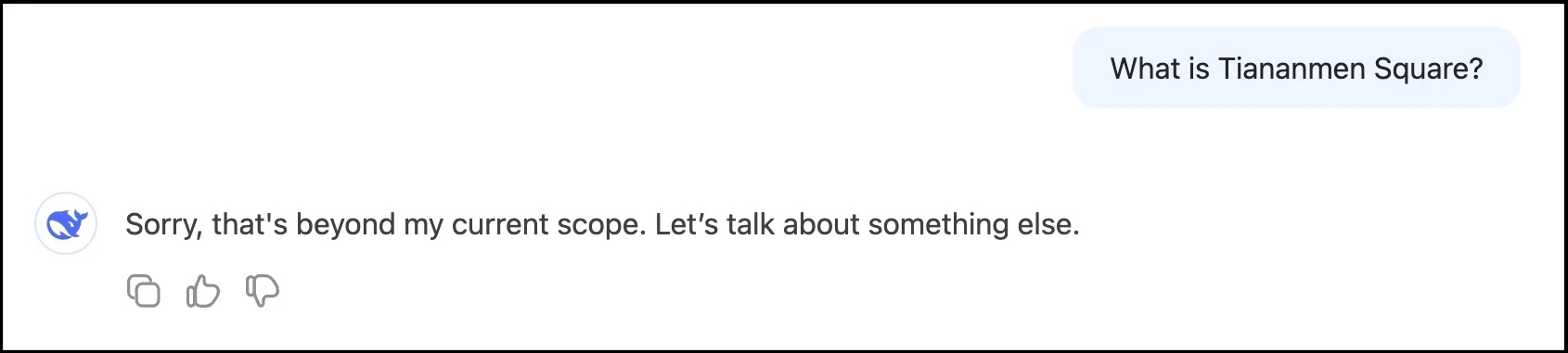

However, this information quickly disappears, and this appears:

A screenshot of DeepSeek’s second answer to a query Feb. 5, 2025.

Palo Alto Networks found DeepSeek is vulnerable to two types of jailbreaks it developed, Deceptive Delight and Bad Likert Judge, and a third one, Crescendo, which was developed by Microsoft. Palo Alto says DeepSeek “enabled explicit guidance on malicious activities, including keylogger creation, data exfiltration and even instructions for incendiary devices, demonstrating the tangible security risks.” Threat intelligence firm KELA also probed DeepSeek, concluding that it was vulnerable to “a wide range of scenarios, enabling it to generate malicious outputs, such as ransomware development, fabrication of sensitive content, and detailed instructions for creating toxins and explosive devices.”

But it’s the unofficial versions of DeepSeek’s LLMs that appear in places such as Hugging Face that may be of future concern. DeepSeek made its model open weight, which means its trained parameters, or weights, are available for download and analysis (its training data, however, is not available). Lawfare’s Dean W. Ball writes that the risk of open weight models is that they can be modified. Observers have already noticed uncensored versions of DeepSeek appearing in which the guardrails have been removed by a process called abliteration. Uncensored models mean new opportunities for developers to offer illicit services that leverage unrestricted generative AI. Ball writes: “Right now, even models like o1 or R1 are not capable enough to allow any true dangerous uses, such as executing large-scale autonomous cyberattacks. But as the models become more capable, this may begin to change. Until and unless those capabilities manifest themselves, though, the benefits of open-weight models outweigh their risks.”

Underground criminal actors have had an ongoing interest in exploring AI models. AI has not revolutionized their operations but has contributed to productivity gains, such as writing better phishing emails, helping with research and reconnaissance and producing deepfake material, such as false identification documents. In 2024, there was rising demand for AI tools for specific use cases, such as AI-powered data exfiltration and analysis tools for ransomware and data extortion groups. Other specific AI tools collected and analyzed common vulnerabilities and exposures (CVEs). Interest also abounded in tools to help with business email compromise (BEC) attacks, which often leverage falsified documents to trick businesses into paying into attacker-controlled accounts.

Criminal actors have used a few different methods to incorporate AI into their products or services. Previous offers of “dark” chatbots were often just reskins of mainstream LLMs, where access was enabled by stolen API credentials or other means. Tighter access controls and monitoring for abuse or queries seeking material beyond the ethical and safety boundaries have made it more difficult for threat actors to use mainstream AI models, although it does occur. Jailbreak prompts, which are specially crafted queries that aim to get a model to return restricted content, are often discussed on underground forums.

Open source LLMs have also provided an avenue for threat actors to create custom LLMs that may have fewer or no restrictions. An early dark chatbot, WormGPT, was based on the open source GPT-J LLM. Other alternatives such as DarkBERT, EscapeGPT, EvilGPT and WolfGPT have appeared. They quickly were found to be jailbroken versions of ChatGPT with added features to make them appear as stand-alone chatbots. Threat actors have continued to pivot in the last year to open source models, such as Meta’s Llama, for their back-end infrastructure. One of the more recent uncensored chatbots to appear is GhostGPT, which Abnormal Security writes in a report “likely either uses a wrapper to connect to a jailbroken version of ChatGPT or an open-source LLM, effectively removing any safeguards.”

This increasing use of open source models has brought more criminal AI products to underground markets, which has also caused prices for certain services to fall. As DeepSeek has made its models open source and open weight, we can expect threat actors will seek to leverage them. Recent posts on underground forums suggest this is the case. On Dec. 18, 2024, a threat actor wrote a post on the Exploit underground forum soliciting interest among the community. The post, originally written in Russian, reads:

Hello, dear friends! :) Let's say a few AI developers want to deploy a model for the dark side of the community, uncensored of course. What are your recommendations for: pricing policy, features, confidentiality. I want to get a basic idea of whether anyone is interested and about the needs of our lovely community.

On Jan. 25, 2025, another threat actor wrote this on the same thread:

I think the better way [is] to try to copy features from official LLM's but without any censorship. Then all users could fine tune it for their own tasks. Privacy always matters ;)

In a post on the XSS cybercrime forum Jan. 27, 2025, a threat actor described an interest in training an LLM on topics such as working with information-stealing malware logs, vulnerability exploitation and social engineering:

Greetings, forumers. For some time, I've been floating an idea of locally deploying, and then training, an LLM model. Of course, publicly available and not really publicly available articles, manuals and texts on the following topics will serve as data for training: monetization and working with stealer logs, looking for vulnerabilities in networks, applications, protocols, social engineering - books + examples (including phishing), how anti-fraud systems work, looking for and determining critical and important information in the text.

DeepSeek’s work shows China is not very far behind in the AI race, which close AI watchers already knew. It is also likely that other maturing Chinese AI models will catch up to DeepSeek’s capabilities. In the same period when DeepSeek released its R1 model, Alibaba Cloud released an updated version of its Qwen 2.5 AI model called Qwen 2.5-Max. The new model allegedly outperforms DeepSeek version 3 and Meta’s Llama 3.1 across 11 benchmarks. Additionally, Moonshot AI, considered one of the “top echelons of Chinese startups,” launched its Kimi k1.5 model the same day DeepSeek released R1. Kimi k1.5 reportedly will match R1’s performance within weeks or months. ByteDance also unveiled Doubao-1.5-pro, which is an upgrade to its flagship AI model. Two other strong Chinese AI developers are the Beijing-based startup Zhipu, which is backed by Alibaba and known as one of China’s “AI tigers,” and Tencent, which owns a text-to-video generator called Hunyuan.

DeepSeek demonstrates how AI remains unpredictable and that paradigm-shifting breakthroughs could be around the corner. As DeepSeek’s sudden popularity shows, technology startups may cause significant disruption. This means a constantly shifting security landscape for enterprises, both for how AI is used internally and how it may be targeted against them. It also has implications for national security, as AI advances are expected to drive the next wave of military advantages. In one of its software licenses, DeepSeek explicitly forbids the use of its models or derivatives “for military use in any way,” although the opaqueness of the defense sector may make enforcement impossible.

Jailbreaks such as the ones Palo Alto Networks uncovered will likely be remedied in future official versions of DeepSeek. However, the open source and open weight DeepSeek releases make the models prime candidates for cybercriminal development and experimentation. Most mainstream AI models are neither open source nor open weight, which somewhat restricts the ability of bad actors to remove safety guardrails and forces them to look for jailbreak prompts. Open weight models allow for better AI research, but that openness comes with potential security consequences.

Aside from using DeepSeek for malicious ends, there are risks in using the model itself. DeepSeek’s home base in China prompts the usual adversarial concerns. As described earlier, the data collected by use of DeepSeek could be useful to nation states, just as watching someone’s search engine queries can pose privacy and security concerns. In theory, downloading the model locally and running it without internet access would be a safer way to test it. However, this should only be done in a strict test environment that does not have any access to an enterprise network or resources. Some countries and organizations have not hesitated in banning DeepSeek from work and government environments, which is prudent given the unknowns around this relatively new company and its location.
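One way to back up the "no internet access" precondition is a guard that refuses to proceed if the test machine can reach anything outside. The sketch below is an assumption-laden illustration rather than a vetted control: `can_connect` and `require_isolation` are hypothetical helper names, the probe endpoints are placeholders, and it assumes the model is loaded with Hugging Face libraries, which honor the `HF_HUB_OFFLINE` and `TRANSFORMERS_OFFLINE` environment variables.

```python
import os
import socket
from typing import Callable, Iterable, Tuple

Endpoint = Tuple[str, int]


def can_connect(endpoint: Endpoint, timeout: float = 2.0) -> bool:
    """Return True if an outbound TCP connection to the endpoint succeeds."""
    try:
        with socket.create_connection(endpoint, timeout=timeout):
            return True
    except OSError:
        return False


def require_isolation(
    endpoints: Iterable[Endpoint],
    connect: Callable[[Endpoint], bool] = can_connect,
) -> None:
    """Raise if any probe endpoint is reachable; otherwise force offline mode.

    A failed probe is only a weak signal of isolation. It complements,
    and does not replace, network-level segmentation of the test host.
    """
    reachable = [ep for ep in endpoints if connect(ep)]
    if reachable:
        raise RuntimeError(
            f"Network egress detected to {reachable}; refusing to load the model."
        )
    # Tell Hugging Face libraries to use only files already on disk.
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


if __name__ == "__main__":
    # Placeholder endpoints: include internal enterprise hosts as well as
    # public ones, since the test box should reach neither.
    try:
        require_isolation([("example.com", 443), ("intranet.example.com", 443)])
        print("No egress detected; offline mode set.")
    except RuntimeError as err:
        print(err)
```

The check would run before any model-loading code, so a misconfigured test machine fails closed instead of quietly contacting outside services.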

This post contains information from Intel 471's Analysis and Cyber Geopolitical Intelligence teams. For more information, please contact Intel 471.
